Introduction

Part 2 of this virtual production blog series studied the four main types of VP as defined by Epic Games [2]. It also explored alternative and unique ways that industry and independent creators are taking advantage of real-time VP technologies. This post explains why motion capture is a crucial element of the VP process. It explores methods of motion capture, looks at common problems and their solutions, and surveys the leaders in motion capture technology.

Obi-Wan Kenobi (2022). Image from ILM.

I like the way you move!

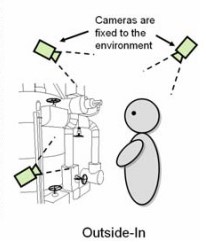

Motion capture is the linchpin of virtual production technology because it translates physical movement in the real world into digital movement in the virtual world. This matters most in a real-time setup: animating camera or performer movement by hand as it happens would be impossible, whereas motion capture transfers the movements of objects and people the moment they occur.

The Mandalorian (2022). Image from Vicon.

If you want a virtual camera to follow the movements of a physical camera in real time, you need motion capture technology. The same is true for converting any real-world movement into digital movement in real time.

The Mandalorian (2019)

Vicon GDC (2022)

Mocap methods: Inside or outside?

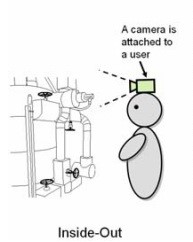

There are two main options for capturing motion: inside-out tracking and outside-in tracking.

Inside-out tracking:

Inside-out tracking places sensors on the objects or people being tracked. The sensors "look outwards" to survey the environment and calculate positional changes [1]. The type of sensor varies by application; however, the most commonly used sensors for inside-out tracking are cameras, infrared (IR) lasers, and inertial measurement units (IMUs). In virtual production, IMUs are the most common sensor for inside-out tracking.

Inside-out vs outside-in. Image from Display Daily.

Inside-out performance capture example:

Outside-in tracking:

Outside-in tracking places sensors (generally cameras) around a designated capture area and points them to "look in" at that space, creating a defined area for motion tracking [1]. This tracked area is called a capture "volume". A volume can be built with a variety of sensors; however, most volumes use optical motion capture cameras [6].


Outside-in performance capture example:

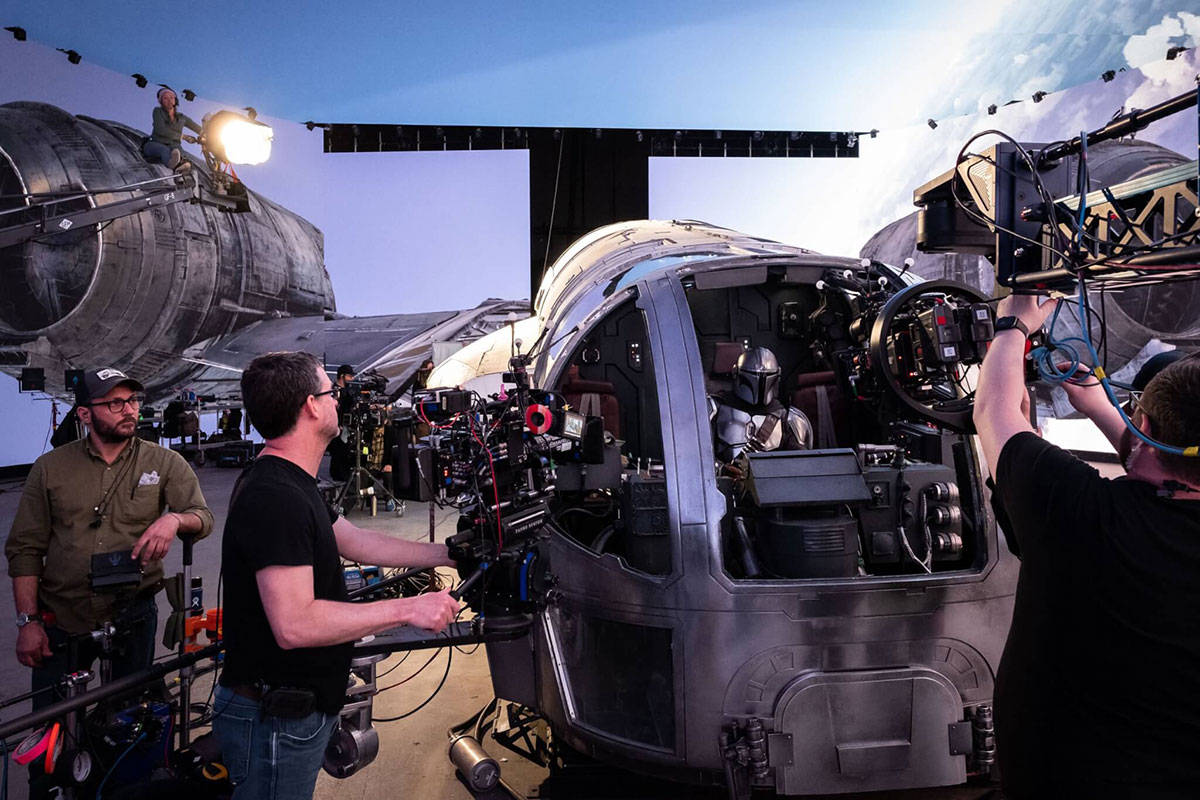

Big-budget virtual production projects almost exclusively use optical motion capture systems to track the motion of cinema cameras, objects, and people. These systems work by attaching reflective motion capture markers (passive markers) to any object or person in the volume. The motion capture cameras emit infrared light into the volume, each marker reflects that light back, and because the same marker is seen by multiple cameras, the system can calculate its position in 3D space in real time.
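To make "seen by multiple cameras to calculate its position" concrete, here is a minimal triangulation sketch. It assumes each camera has already turned its 2D view of the marker into a 3D ray (camera centre plus a direction towards the marker); real systems get there through lens calibration and sub-pixel centroiding, which are omitted. The function names and camera layout are hypothetical, and this is not Vicon's or any vendor's algorithm: it simply finds the point with the smallest summed squared distance to all rays.

```python
"""Least-squares triangulation of one marker from several camera rays (sketch)."""
from math import sqrt

Vec = tuple[float, float, float]


def normalize(v: Vec) -> Vec:
    n = sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def det3(m: list[list[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a: list[list[float]], b: list[float]) -> Vec:
    """Solve the 3x3 linear system a @ x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return (x[0], x[1], x[2])


def triangulate(rays: list[tuple[Vec, Vec]]) -> Vec:
    """Point with the smallest summed squared distance to every ray.

    Each ray is (camera_centre, direction_towards_marker). For a unit
    direction d, P = I - d d^T projects onto the plane perpendicular to
    the ray, and the optimum p solves (sum of P) p = sum of (P @ centre).
    """
    if len(rays) < 2:
        raise ValueError("need at least two camera rays")
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for centre, direction in rays:
        d = normalize(direction)
        for i in range(3):
            for j in range(3):
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p_ij
                b[i] += p_ij * centre[j]
    return solve3(a, b)


# Hypothetical volume: three cameras looking at a marker at (1.0, 2.0, 1.5).
cameras = [(0.0, 0.0, 3.0), (5.0, 0.0, 3.0), (0.0, 5.0, 3.0)]
marker = (1.0, 2.0, 1.5)
rays = [(c, (marker[0] - c[0], marker[1] - c[1], marker[2] - c[2])) for c in cameras]
print(triangulate(rays))  # recovers the marker position (up to rounding)
```

With noise-free rays the result matches the marker exactly; with real, noisy rays every additional camera helps average out error, which is one reason large volumes use many cameras.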

Example of outside-in tracking with passive markers:

Performance capture demo. Image from Logemas.

What’s better?

Both types of tracking have strengths and weaknesses depending on the use case. For large productions the clear choice is outside-in tracking, because it is significantly more accurate in terms of the exact world positions of objects. Inside-out tracking has lower positional accuracy because nothing external validates the exact world position [3]. This is especially true of IMU-based tracking systems, which suffer heavily from drift. Drift refers to the accumulation of small errors in accelerometer and gyroscope measurements, which over time causes characters or objects to virtually "drift" away from where their physical counterparts are in the world [3, 5].

For ICVFX specifically, drift is a critical concern, because the camera must be tracked accurately to keep parallax consistently correct across an entire filming day. These issues generally relegate inside-out tracking to indie and smaller-budget virtual production projects.
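The drift described above can be illustrated with a toy calculation. This sketch assumes a hypothetical IMU sampled at 100 Hz whose accelerometer reports a constant bias of 0.01 m/s² while the object is perfectly still, and it integrates that reading twice with no correction of any kind. Because the position error grows roughly as 0.5 × bias × t², the apparent displacement reaches about 18 m after one minute. Real IMU suits fuse several sensors and apply body constraints, so their drift is far smaller; the point is only to show how uncorrected errors accumulate.

```python
def integrate_position(accelerations: list[float], dt: float) -> float:
    """Dead-reckon 1D position by integrating acceleration twice (Euler steps)."""
    velocity = 0.0
    position = 0.0
    for a in accelerations:
        velocity += a * dt         # acceleration -> velocity
        position += velocity * dt  # velocity -> position
    return position


RATE_HZ = 100   # hypothetical IMU sample rate
BIAS = 0.01     # hypothetical accelerometer bias, m/s^2
dt = 1.0 / RATE_HZ

# The object is perfectly still, so the true acceleration is zero and
# every sample the IMU reports is pure bias.
for seconds in (1, 10, 60):
    samples = [BIAS] * (seconds * RATE_HZ)
    drift = integrate_position(samples, dt)
    print(f"after {seconds:>2} s the object appears to have moved {drift:.3f} m")
```

Doubling the capture time roughly quadruples the error, which is why an absolute reference (such as an optical volume) is needed to keep IMU data honest over a long shooting day.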


One weakness of outside-in tracking is occlusion of the motion capture markers. Occlusion is defined as the state of being closed, blocked, or shut [4]. In motion capture terms, a marker is occluded from a camera when an object or person blocks that camera from "seeing" the marker. A marker occluded from a single camera is usually not an issue, because motion capture systems consist of many cameras and others will likely still see it. Occlusion only becomes a problem when fewer than three cameras see the marker. Two views are the geometric minimum for triangulating a point, but the redundancy of a third view is generally required for an accurate reconstruction of that marker in 3D space.
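A toy visibility check shows why occlusion is a question of how many cameras still see a marker rather than whether any single view is blocked. In this sketch each camera's line of sight is a straight segment, occluders (a performer's arm, say) are approximated as spheres, and `MIN_CAMERAS = 3` is a hypothetical threshold taken from the rule of thumb above. None of these names come from a real motion capture SDK.

```python
Vec = tuple[float, float, float]
MIN_CAMERAS = 3  # hypothetical threshold, following the three-camera rule of thumb


def blocks_line_of_sight(camera: Vec, marker: Vec, centre: Vec, radius: float) -> bool:
    """True if a spherical occluder cuts the straight segment camera -> marker."""
    d = [m - c for c, m in zip(camera, marker)]   # segment direction
    f = [c - o for c, o in zip(camera, centre)]   # camera relative to occluder
    # Parameter of the point on the segment closest to the occluder centre,
    # clamped so only the stretch between camera and marker is considered.
    t = -sum(fi * di for fi, di in zip(f, d)) / sum(di * di for di in d)
    t = max(0.0, min(1.0, t))
    closest = [c + t * di for c, di in zip(camera, d)]
    return sum((p - o) ** 2 for p, o in zip(closest, centre)) < radius ** 2


def visible_cameras(marker: Vec, cameras: list[Vec], occluders: list[tuple[Vec, float]]) -> list[Vec]:
    """Cameras with an unobstructed view of the marker."""
    return [cam for cam in cameras
            if not any(blocks_line_of_sight(cam, marker, c, r) for c, r in occluders)]


def can_reconstruct(marker: Vec, cameras: list[Vec], occluders: list[tuple[Vec, float]],
                    min_cameras: int = MIN_CAMERAS) -> bool:
    return len(visible_cameras(marker, cameras, occluders)) >= min_cameras


# Hypothetical setup: four corner cameras, marker near the middle of the volume.
cameras = [(4.0, 4.0, 3.0), (-4.0, 4.0, 3.0), (4.0, -4.0, 3.0), (-4.0, -4.0, 3.0)]
marker = (0.0, 0.0, 1.0)
arm = ((2.0, 2.0, 2.0), 0.3)     # sphere between the marker and the first camera
other = ((-2.0, 2.0, 2.0), 0.3)  # sphere between the marker and the second camera

print(len(visible_cameras(marker, cameras, [arm])))    # 3
print(can_reconstruct(marker, cameras, [arm]))         # True
print(can_reconstruct(marker, cameras, [arm, other]))  # False
```

Blocking one of four cameras leaves three clear views, so the marker can still be reconstructed; blocking a second drops it below the threshold, which is exactly the situation the hardware and software fixes below try to avoid.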

Punch occlusion in the face!

Physical/hardware solutions:

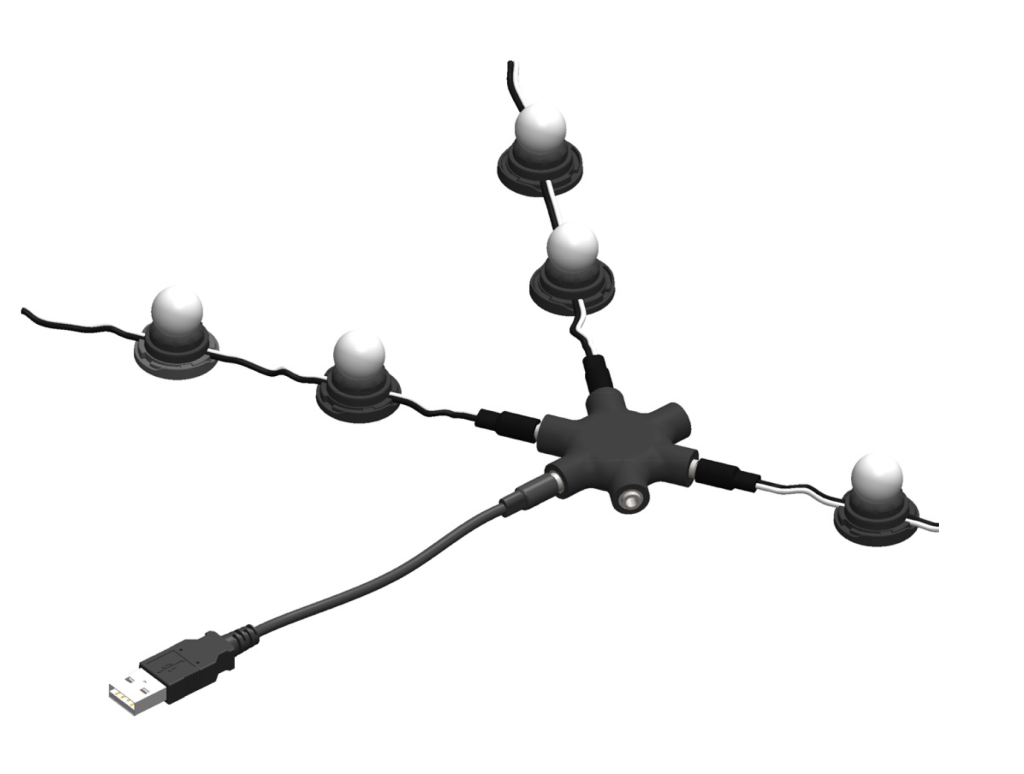

Motion capture providers have tackled occlusion in some ingenious ways. Physically, motion capture markers are made spherical and mounted so that they sit slightly raised away from the tracked object or person. This gives the motion capture system a greater degree of visibility of the markers and reduces occlusion significantly. Additionally, "active markers" are now being used to increase the visibility of objects. Passive markers simply have reflective tape or paint on them, whereas active markers have built-in LEDs, which makes them more visible to the cameras.

Active Marker example:

Finally, the ever-increasing accuracy and resolution of motion capture camera hardware is greatly extending the distance from which cameras can see markers. Vicon's latest Valkyrie camera range offers sensors of up to 26 megapixels [8].
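Why does resolution extend range? Under a simple pinhole-camera approximation, a marker of diameter D at distance d spans roughly D / (2 × d × tan(FOV / 2)) × W pixels, where W is the sensor width in pixels. The sketch below assumes a 14 mm marker and a 60° horizontal field of view, and compares a hypothetical 2048-pixel-wide sensor with a 5120-pixel-wide one (5120 × 5120 is about 26.2 MP). These figures are illustrative assumptions, not Vicon specifications, and the model ignores lens distortion, illumination, and sub-pixel centroiding, all of which matter in practice.

```python
from math import radians, tan


def marker_pixels(diameter_m: float, distance_m: float,
                  fov_deg: float, width_px: int) -> float:
    """Approximate width of a marker's image in pixels (pinhole model)."""
    scene_width_m = 2.0 * distance_m * tan(radians(fov_deg) / 2.0)
    return diameter_m / scene_width_m * width_px


def max_distance_m(diameter_m: float, min_pixels: float,
                   fov_deg: float, width_px: int) -> float:
    """Furthest distance at which the marker still spans min_pixels."""
    return diameter_m * width_px / (2.0 * tan(radians(fov_deg) / 2.0) * min_pixels)


MARKER_M = 0.014  # hypothetical 14 mm marker
FOV_DEG = 60.0    # hypothetical horizontal field of view
for width in (2048, 5120):  # hypothetical sensor widths in pixels
    px = marker_pixels(MARKER_M, 20.0, FOV_DEG, width)
    reach = max_distance_m(MARKER_M, 3.0, FOV_DEG, width)
    print(f"{width} px wide: {px:.2f} px at 20 m, 3 px coverage up to {reach:.1f} m")
```

Because pixel coverage scales linearly with sensor width, going from 2048 to 5120 pixels across extends the distance at which a marker covers the same number of pixels by a factor of 2.5 (from about 8.3 m to about 20.7 m under these assumptions).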

Software solutions:

Alongside these hardware developments, there have been clever software methods for tackling occlusion. Vicon's Shogun software supports rigid body tracking modes that allow quick booting of objects and keep objects tracked even when multiple markers are completely occluded. Technically, this is achieved by virtually linking multiple markers together to form a rigid object. If one or more markers on that object become occluded, the software calculates where they should be based on the remaining markers.
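The rigid-body idea can be sketched in a few lines. This is a simplified, hypothetical version, not Shogun's solver: the object is defined by marker positions in its own local frame, an orthonormal frame is built from three visible, non-collinear markers in both the model and the live data, and each occluded marker's offset is carried across from one frame to the other. Production solvers typically use a least-squares fit over all visible markers (for example, the Kabsch algorithm) and handle noise and mislabelling, which this sketch ignores.

```python
Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec) -> Vec:
    n = dot(a, a) ** 0.5
    if n < 1e-12:
        raise ValueError("degenerate layout (coincident or collinear markers)")
    return scale(a, 1.0 / n)


def triad(p0: Vec, p1: Vec, p2: Vec) -> tuple[Vec, Vec, Vec]:
    """Right-handed orthonormal axes built from three non-collinear points."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return x, y, z


def fill_occluded(model: dict[str, Vec], seen: dict[str, Vec]) -> dict[str, Vec]:
    """World positions for every marker in `model` (hypothetical helper).

    model: marker name -> position in the rigid object's local frame.
    seen:  marker name -> world position, for markers visible this frame.
    """
    visible = [name for name in model if name in seen]
    if len(visible) < 3:
        raise ValueError("need at least three visible markers")
    a, b, c = visible[:3]
    lx, ly, lz = triad(model[a], model[b], model[c])
    wx, wy, wz = triad(seen[a], seen[b], seen[c])
    result: dict[str, Vec] = {}
    for name, local in model.items():
        if name in seen:
            result[name] = seen[name]  # trust the measurement when there is one
            continue
        rel = sub(local, model[a])
        # Coordinates of the hidden marker along the object's own axes...
        u, v, w = dot(rel, lx), dot(rel, ly), dot(rel, lz)
        # ...re-expressed along those same axes as they currently sit in the world.
        result[name] = add(seen[a], add(add(scale(wx, u), scale(wy, v)), scale(wz, w)))
    return result


# Hypothetical four-marker object, rotated 90 degrees about the vertical axis
# and moved to (1, 2, 0.5); marker D is occluded this frame.
model = {"A": (0.0, 0.0, 0.0), "B": (0.1, 0.0, 0.0),
         "C": (0.0, 0.1, 0.0), "D": (0.05, 0.05, 0.08)}
seen = {"A": (1.0, 2.0, 0.5), "B": (1.0, 2.1, 0.5), "C": (0.9, 2.0, 0.5)}
print(fill_occluded(model, seen)["D"])  # approximately (0.95, 2.05, 0.58)
```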

Shogun applies a similar method to human tracking, linking segments of markers on a performer together to calculate where body parts should be when their markers become occluded. Vicon calls this approach "unbreakable solving".

Unbreakable solving:

Why not have both?

As camera tracking becomes more central to ICVFX workflows, camera crowns are increasingly used to track cameras. A camera crown is a device mounted on a cinema camera with integrated active markers for camera tracking. Vicon is pioneering the next iteration of active crowns by combining inside-out and outside-in tracking: its latest active crown pairs Vicon's outside-in optical tracking with an IMU built into the crown. This allows seamless switching from outside-in to inside-out tracking should all of the crown's markers become occluded during a capture.
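The optical-to-IMU handover can be sketched as a simple state machine. This is an assumption-laden illustration, not Vicon's implementation, which the sources here do not describe: it tracks position only, treats the IMU as supplying a per-frame displacement estimate, and hard-switches between the two sources. A production system would more likely fuse both continuously (for example with a Kalman filter) and track orientation as well.

```python
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]


@dataclass
class HybridCrownTracker:
    """Hypothetical optical-first tracker that falls back to IMU dead reckoning."""
    position: Vec = (0.0, 0.0, 0.0)
    source: str = "none"

    def update(self, optical: Optional[Vec], imu_delta: Vec) -> Vec:
        """Advance one frame.

        optical:   absolute position solved from the crown's markers, or None
                   when every marker is occluded this frame.
        imu_delta: displacement since the last frame, estimated from the IMU.
        """
        if optical is not None:
            # Markers visible: the absolute optical solve wins, which also
            # discards any drift accumulated while running on the IMU.
            self.position = optical
            self.source = "optical"
        else:
            # Fully occluded: continue from the last good pose using the IMU.
            x, y, z = self.position
            dx, dy, dz = imu_delta
            self.position = (x + dx, y + dy, z + dz)
            self.source = "imu"
        return self.position


tracker = HybridCrownTracker()
tracker.update((1.0, 2.0, 1.5), (0.0, 0.0, 0.0))  # optical lock
tracker.update(None, (0.01, 0.0, 0.0))            # all crown markers occluded
print(tracker.source, tracker.position)
```

Snapping back to the optical solve as soon as markers reappear means the IMU only has to bridge short gaps, which limits how much drift can build up.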

SIGGRAPH 2022 – Vicon Crown Camera Tracker:

The best in the business

Every virtual production method outlined in this three-part blog series relies on motion capture to track performers, cameras, or other objects accurately and translate that data into the digital world.

Vicon has been the dominant pioneer of motion capture technology in the virtual production space thanks to its camera accuracy and its integration with ILM. ILM's virtual production developer Rachel Rose attests to this: “Since day one Vicon has enabled us to do things that were never possible before — and that’s as true today as it was in the 90s. Vicon’s technology and hardware have constantly advanced throughout our relationship, and the processing power available to us with their technology is like no other. We can deploy and always count on Vicon’s tech as it’s such reliable, robust hardware requiring only a quick calibration” [7].

Final thoughts:

Virtual production is an amalgamation of technology and creativity, and its storytelling methods will keep evolving as real-time technology improves. The explosion of virtual production into mainstream filmmaking has put creative decisions into the hands of creatives earlier in the production process; they no longer have to wait until deep into post-production to see outcomes. This is an exciting time for filmmaking, because technology that gives filmmakers greater control over final-pixel decisions earlier in production will make for more immersive storytelling.

References

- [1] Display Daily. (2018). CTA 2069 and Other Words for VR, AR and MR. Retrieved 13 December, from https://www.displaydaily.com/article/display-daily/cta-2069-and-other-words-for-vr-ar-and-mr

- [2] Kadner, N. (2019). The virtual production field guide volume 1. Unreal Engine. Retrieved 13 September 2022, from https://cdn2.unrealengine.com/vp-field-guide-v1-3-01-f0bce45b6319.pdf

- [3] Kaichi, T., Maruyama, T., Tada, M., & Saito, H. (2020). Resolving Position Ambiguity of IMU-Based Human Pose with a Single RGB Camera. Retrieved from https://doi.org/10.3390/s20195453

- [4] Oxford Dictionary. (2022). Occlusion. Retrieved 13 December, from https://www.oxfordlearnersdictionaries.com/us/definition/english/occlusion?q=occlusion

- [5] Oxts. (2020). Inertial navigation: Drift. Retrieved 13 December, from https://www.oxts.com/ins-drift/#:~:text=Drift%20is%20the%20term%20used,become%20more%20and%20more%20inaccurate

- [6] Unreal Engine. (2022). VP glossary. Retrieved 12 September 2022, from https://www.vpglossary.com/

- [7] Vicon. (2021). Vicon x ILM. Unreal Engine. Retrieved 12 September 2022, from https://www.vicon.com/resources/case-studies/vicon-x-ilm/

- [8] Vicon. (2022). Valkyrie. Retrieved 12 September 2022, from https://www.vicon.com/hardware/cameras/valkyrie/