Introduction

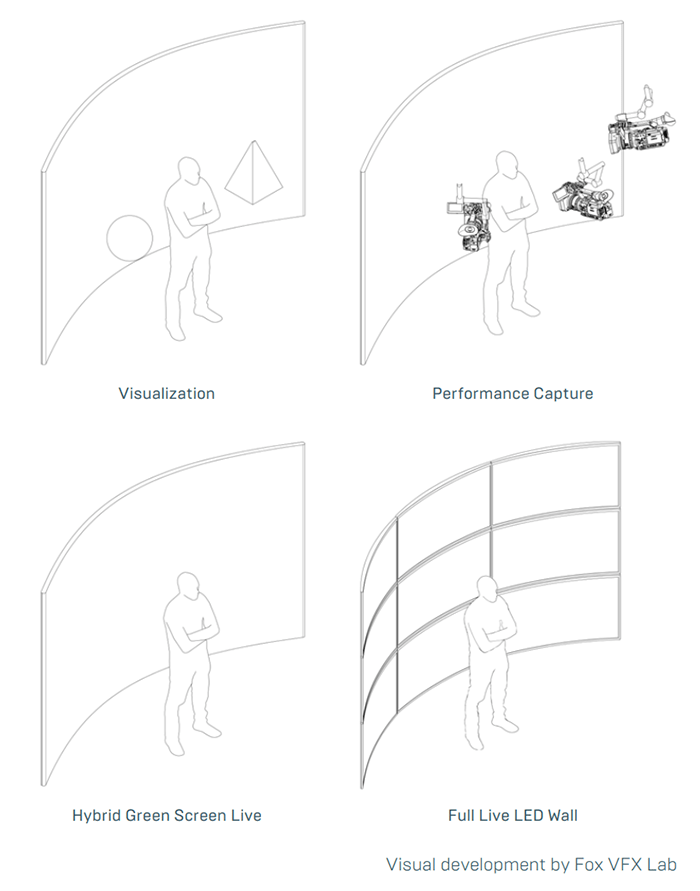

Part 1 introduced the best-known method of virtual production (VP), in which LED panels are used for real-time output. ICVFX is the most widely recognised form of virtual production; however, it is not the only one. Epic Games defines four main types of virtual production:

- Visualisation
- Performance Capture
- Hybrid Green Screen Live
- Full Live LED Wall (ICVFX)

What all these methods have in common is the real-time interaction of storytellers and technology.

Visualisation

Visualisation virtual production describes filmmakers engaging with virtual environments and/or character assets (often unfinalised) to help visualise the project. It has many use cases, from pitching a project, to virtual location scouting, to helping the stunt team visualise the outcome of stunts [3].

The advantage of using virtual production for visualisation is that key creatives and technicians on a production can quickly experiment and/or pre-visualise what the end results are likely to look like. This assists in orienting everyone on a production towards a common outcome.

Performance Capture

Performance capture refers to motion capture workflows that virtually track the movements of real-life performers, cameras, or other objects for translation into the digital world [3]. James Cameron was a major driver of innovation in performance capture; he “wanted to direct live actors on the mocap stage but view their performances in the Pandora environment” [9].

Virtual production performance capture workflows differ in how much they integrate the virtual and real worlds. Some projects remain fully digital, while others combine digital and real-world elements. Performance capture workflows can be further broken down into motion capture, full performance capture, facial capture, and full-body animation [3].

Quick Define: Motion capture refers to any process where real world objects are tracked on a stage and fed into the virtual world to control digital assets.

- Facial capture refers to using motion capture technology to track facial data and translate the data to digital characters.

- Full-body animation capture refers to tracking the full-body movements of performers and retargeting the animations to digital characters and/or creatures.
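Retargeting, the final step in full-body animation capture, can be illustrated with a minimal Python sketch. It assumes a simplified skeleton in which each joint stores a local rotation as a quaternion and only the root stores a translation. The joint names, the `JOINT_MAP` table and the height-ratio scaling are hypothetical choices for illustration, not the workflow of Vicon, Unreal Engine or any particular studio.

```python
from dataclasses import dataclass

Quat = tuple[float, float, float, float]  # (w, x, y, z) local joint rotation
Vec3 = tuple[float, float, float]         # root translation in metres


@dataclass
class Pose:
    root_position: Vec3
    joint_rotations: dict[str, Quat]


# Hypothetical mapping from performer skeleton joints to character rig joints.
JOINT_MAP = {
    "Hips": "pelvis",
    "Spine": "spine_01",
    "Head": "head",
    "LeftArm": "upperarm_l",
    "RightArm": "upperarm_r",
}


def retarget(pose: Pose, performer_height: float, character_height: float) -> Pose:
    """Copy local rotations across by joint name and scale the root translation.

    Rotations are copied unchanged, which assumes both skeletons share the same
    rest pose and joint axes. The root translation is scaled by the height ratio
    so a smaller or larger character covers a proportional distance.
    """
    scale = character_height / performer_height
    x, y, z = pose.root_position
    rotations = {
        JOINT_MAP[name]: rotation
        for name, rotation in pose.joint_rotations.items()
        if name in JOINT_MAP  # joints with no counterpart on the rig are dropped
    }
    return Pose(root_position=(x * scale, y * scale, z * scale), joint_rotations=rotations)


frame = Pose((0.0, 1.0, 0.5), {"Hips": (1.0, 0.0, 0.0, 0.0), "LeftHand": (1.0, 0.0, 0.0, 0.0)})
creature = retarget(frame, performer_height=1.8, character_height=0.9)
print(creature.root_position)  # (0.0, 0.5, 0.25)
```

Retargeting a frame from a 1.8 m performer onto a 0.9 m creature halves the root translation, and the `LeftHand` joint is dropped because the hypothetical rig has no matching joint. Production retargeting also has to compensate for different bind poses and limb proportions, which this sketch deliberately ignores.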

An example of performance capture that integrates the real and digital worlds is Taika Waititi being tracked with motion capture to become Korg, standing next to Thor (Chris Hemsworth), in Thor: Love and Thunder (2022).

Side note: ILM, which provides VFX for many Marvel films, now uses Vicon tracking in all its big-budget projects on several stages around the world. Thor: Love and Thunder was filmed in Sydney, with a Vicon system provided by yours truly! Logemas!

Thor: Love and Thunder (2022). Image from ILM.

Finally, there are other unique use cases in which only camera tracking is used for fully animated productions. The Lion King (2019) is an example: the characters were animated by hand, but the camerawork was digitally tracked using motion capture to create realistic camera movements [6].

The Lion King (2019). Image from fxguide.

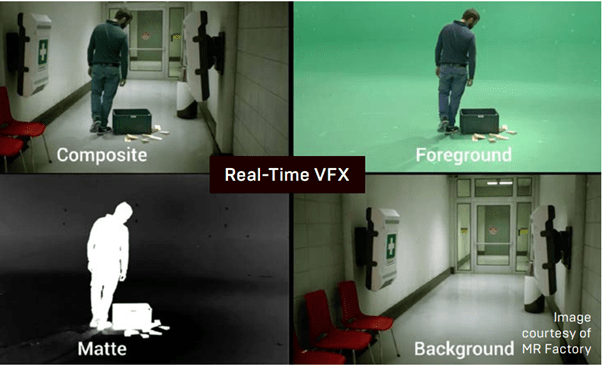

Hybrid Green Screen Live

Hybrid Green Screen Live refers to hybrid chroma key compositing, in which elements are shot against a green screen and composited into a virtual environment. It can take one of two forms: real-time hybrid virtual production, where the composited result is fed back live, or post-produced hybrid virtual production, where the live green screen composite serves as a reference for blocking and the final composite is done in post-production.
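At its core, chroma keying decides for each pixel whether the camera is seeing the green backing or the subject, and swaps in the virtual environment wherever it sees green. The sketch below is deliberately naive and rests on assumptions: frames are nested lists of 8-bit RGB tuples, and a pixel counts as green screen when its green channel exceeds both red and blue by a hypothetical `threshold`. Real keyers use colour-distance mattes, soft edges and spill suppression.

```python
Pixel = tuple[int, int, int]  # 8-bit (R, G, B)
Image = list[list[Pixel]]     # rows of pixels


def is_green_screen(pixel: Pixel, threshold: int = 60) -> bool:
    """Treat a pixel as backing colour if green beats both red and blue by `threshold`."""
    r, g, b = pixel
    return g - max(r, b) > threshold


def composite(foreground: Image, background: Image, threshold: int = 60) -> Image:
    """Replace keyed green pixels in the camera frame with the virtual background.

    This is a hard (binary) matte: each output pixel comes entirely from either
    the camera frame or the virtual environment, with no blending at edges.
    """
    if len(foreground) != len(background) or any(
        len(f_row) != len(b_row) for f_row, b_row in zip(foreground, background)
    ):
        raise ValueError("foreground and background must have the same dimensions")
    return [
        [bg if is_green_screen(fg, threshold) else fg for fg, bg in zip(f_row, b_row)]
        for f_row, b_row in zip(foreground, background)
    ]


camera_frame = [[(20, 200, 30), (180, 150, 140)]]  # green backing, then a skin tone
virtual_set = [[(10, 10, 80), (10, 10, 80)]]
print(composite(camera_frame, virtual_set))  # [[(10, 10, 80), (180, 150, 140)]]
```

In a real-time hybrid setup, a composite like this would be computed for every frame and fed back live; in the post-produced variant, the same live composite only serves as a blocking reference.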

An example of hybrid green screen live from Logemas.

Full Live LED Wall (ICVFX)

ICVFX is a virtual production method in which a traditional VFX blue or green screen is replaced with LED panels that display a virtual background in real time. See Part 1 for more.

Thor: Love and Thunder (2022). Image from ILM.

There couldn’t possibly be even more?!?!

These additional types of virtual production in film highlight how complex it is to define virtual production. Filmmakers can approach real-time technology from many angles to achieve creative outcomes.

While virtual production primarily refers to using real-time technology in a filmmaking context, the technology is being used in many other ways. “Virtual event production” is a term used for events held with the technology, for example virtual concerts, fashion shows, broadcast integration, and virtual art exhibitions [5]. The rapper Travis Scott, for instance, performed a live virtual concert inside the video game Fortnite [5].

Twitch streamers have even started to use full-body and facial performance capture to interact with their fanbase in real time. One example is a virtual cyborg streamer named Zero from Nexus. Zero became popular after videos of him interacting with people on the internet went viral on TikTok. The performer keeps his real-life identity hidden, so his followers know him only as the digital character Zero.

Zero from Nexus. Image from Virtual Humans.

Clearly, filmmakers' push to advance virtual production technology has paved the way for many unique use cases. The introduction of VP techniques in film production has revolutionised the way creatives think about making content, sending ripples of creative engagement with real-time technology through the film industry and beyond.

In the next blog post, we will take a deeper look at why motion capture plays such an important role in virtual production workflows.

References

[1] Gale, A. (2022). Kids ask questions to Crush from ‘Finding Nemo’ in video seen 28M times. Newsweek. Retrieved 28 September 2022, from https://www.newsweek.com/kids-ask-questions-crush-finding-nemo-video-seen-28m-times-1715461

[2] Hogg, T. (2019). Weta and Thanos come full circle in ‘Avengers: Endgame’. AWN. Retrieved 27 September 2022, from https://www.awn.com/vfxworld/weta-and-thanos-come-full-circle-avengers-endgame?fbclid=IwAR15nco4YPZHn-wNEfln-0tQ3xTo1IeWdoWWV56YRkHbpgvna0hUuROO9so

[3] Kadner, N. (2019). The virtual production field guide: Volume 1. Unreal Engine. Retrieved 13 September 2022, from https://cdn2.unrealengine.com/vp-field-guide-v1-3-01-f0bce45b6319.pdf

[4] Kadner, N. (2021). The virtual production field guide: Volume 2. Unreal Engine. Retrieved 13 September 2022, from https://cdn2.unrealengine.com/Virtual+Production+Field+Guide+Volume+2+v1.0-5b06b62cbc5f.pdf

[5] Rao, N. (2021). What is virtual production? CG Spectrum. Retrieved 12 September 2022, from https://www.cgspectrum.com/blog/what-is-virtual-production

[6] Summers, N. (2019). Inside the virtual production of ‘The Lion King’. Engadget. Retrieved 15 September 2022, from https://www.engadget.com/2019-07-29-lion-king-remake-vfx-mpc-interview.html#:~:text=Save%20for%20one%20shot%2C%20the,placing%20markers%20on%20live%20animals.

[7] Unreal Engine. (2022). The future of filmmaking is here. Unreal Engine. Retrieved 12 September 2022, from https://www.unrealengine.com/en-US/virtual-production

[8] Vicon. (2021). Vicon x ILM. Vicon. Retrieved 12 September 2022, from https://www.vicon.com/resources/case-studies/vicon-x-ilm/

[9] Weta FX. (2022). Virtual production is where the physical and digital worlds meet. Retrieved 14 September 2022, from https://www.wetafx.co.nz/research-and-tech/technology/virtual-production/